Caching HTTPS

Shared Caching

The dilemma with shared caching of resources requested via HTTPS is that intermediate caching of end-to-end-encrypted content should not be possible, because it could thwart essential goals of encrypted communication:

- Serving encrypted content from a cache can undermine authenticity, because content can be delivered from a non-authoritative source as if it were authoritative. From a cryptographic point of view, serving the result of an earlier request as the result of a later request constitutes a replay attack.

- Serving content from a cache that is shared by multiple clients can undermine privacy. For example, a web application that does not sufficiently control cache refresh and cache storage, and does not tag resource entities per user, could let the cache store a private profile page retrieved by user A from the URI /profile.php. If user B later requests /profile.php, the request is served from the cache, and B gains access to A's private information.

- Since caching can involve transformation of content and metadata, it can undermine integrity; a cached version of a resource can differ from the non-cached version.
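
The privacy problem from the /profile.php example can be reproduced with a toy model. The sketch below is only an illustration: `origin`, `NaiveSharedCache` and `UserKeyedCache` are hypothetical names, and a real cache would key on far more than a URI and a user name.

```python
from typing import Dict, Tuple


def origin(uri: str, user: str) -> str:
    """Hypothetical origin server: the same URI yields a per-user page."""
    return f"private profile of {user}"


class NaiveSharedCache:
    """Keys entries on the URI alone, ignoring who sent the request."""

    def __init__(self) -> None:
        self._store: Dict[str, str] = {}

    def get(self, uri: str, user: str) -> str:
        if uri not in self._store:
            # Cache miss: fetch on behalf of the current user and keep the result.
            self._store[uri] = origin(uri, user)
        return self._store[uri]


class UserKeyedCache:
    """Keys entries on (URI, user), so no entry is shared between users."""

    def __init__(self) -> None:
        self._store: Dict[Tuple[str, str], str] = {}

    def get(self, uri: str, user: str) -> str:
        key = (uri, user)
        if key not in self._store:
            self._store[key] = origin(uri, user)
        return self._store[key]
```

With the naive cache, a request by user B for /profile.php after user A returns A's page. The user-keyed variant avoids the leak, but its entries are no longer shared between clients, so it gives up the main benefit of a shared cache.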

Private Caching

Because shared caching is unavailable for HTTPS content, a lot of development effort goes into caching schemes that operate on the delivery side (using caching reverse proxies) or on the user agent side (using caches maintained by the user agent). Even so, caching encrypted content remains problematic: content that the application expects to be encrypted instead resides in a cache in decrypted form.

For example, unless specifically configured not to do so, the private caches of popular web browsers leave cached content in their disk storage in decrypted form. These resources are vulnerable to unauthorized access and manipulation.

Bypassing

Using a proxy auto-configuration (PAC) file, user agents can be instructed not to use a caching proxy for any request with the “https” scheme. HTTPS communication is thereby excluded from caching.
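
Real PAC files are JavaScript and define a function `FindProxyForURL(url, host)` that returns strings such as `DIRECT` or `PROXY host:port`. The Python sketch below only models that decision. The proxy address `cache.example.internal:3128` and the function name `find_proxy_for_url` are assumptions made for the illustration.

```python
from urllib.parse import urlsplit

# Hypothetical caching proxy, written in the PAC result-string format.
CACHING_PROXY = "PROXY cache.example.internal:3128"


def find_proxy_for_url(url: str) -> str:
    """Model of a PAC decision: bypass the caching proxy for https URLs."""
    scheme = urlsplit(url).scheme.lower()
    if scheme == "https":
        # Connect to the origin server directly, without the proxy.
        return "DIRECT"
    # All other requests go through the caching proxy.
    return CACHING_PROXY
```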

Tunneling

A caching proxy can implement the HTTP method CONNECT. A user agent uses this method to establish a tunnel to a webserver over an HTTP session between client and cache, in which the cache forwards raw data between client and authoritative server. Content is not cached, and end-to-end encryption between user agent and authoritative server stays intact.
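
A minimal sketch of both sides of such a tunnel follows, assuming plain blocking sockets. `build_connect_request` shows the request a client sends to open the tunnel. `pipe` and `tunnel` show the proxy copying bytes without inspecting them. All three function names are hypothetical, and a real proxy would also parse the request, connect to the target and answer with a 2xx status before relaying.

```python
import socket
import threading
from typing import List


def build_connect_request(host: str, port: int = 443) -> bytes:
    """Request a client sends to the proxy to open a tunnel to host:port."""
    target = f"{host}:{port}"
    return f"CONNECT {target} HTTP/1.1\r\nHost: {target}\r\n\r\n".encode("ascii")


def pipe(src: socket.socket, dst: socket.socket) -> None:
    """Copy bytes from src to dst unchanged until src signals end of stream."""
    while True:
        chunk = src.recv(4096)
        if not chunk:
            break
        dst.sendall(chunk)  # opaque payload: nothing is decrypted or stored
    try:
        dst.shutdown(socket.SHUT_WR)  # propagate end of stream to the peer
    except OSError:
        pass  # peer already closed the connection


def tunnel(client: socket.socket, upstream: socket.socket) -> List[threading.Thread]:
    """Relay both directions concurrently and return the worker threads."""
    workers = [
        threading.Thread(target=pipe, args=(client, upstream), daemon=True),
        threading.Thread(target=pipe, args=(upstream, client), daemon=True),
    ]
    for worker in workers:
        worker.start()
    return workers
```

Because the relay only ever sees TLS records as opaque bytes, it has nothing meaningful to cache, which is why this mode preserves end-to-end encryption.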

“SSL-Bumping”

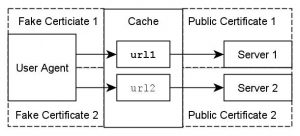

SSL-Bumping is a mode of operation of a proxy for HTTPS communication that involves terminating the end-to-end encryption from the authoritative webserver at the public-facing socket of the proxy. Internally, the proxy maintains the resources in decrypted form. For delivery of resources to clients, the proxy generates “fake” certificates for the authoritative websites, thus being able to impersonate the authoritative source of content towards the clients. Clients must be configured to trust the certificate authority used by the cache to sign these “fake” certificates for domain names of potentially external webservers.

SSL-bumping is very controversial. On one hand, it offers considerable benefits:

- The main advantage is that HTTPS resources can be subject to caching, transformation, filtering and redirecting by a shared cache operating as an SSL-Bumping proxy.

- An additional advantage is that dubious communication between clients and servers can be analyzed independently of the client or the server. This can be useful for policy enforcement, security enhancement and forensic analysis.

But SSL-bumping also introduces serious problems:

- The biggest drawback is the manifestation of all the problems of shared caching of HTTPS content described above: with SSL-bumping in place, authenticity and privacy of any affected HTTPS communication are questionable, and applications relying on encryption of transmitted content are possibly vulnerable to unexpected security flaws.

- If the proxy CA that issues the “fake” certificates is compromised, all HTTPS communication between clients and webservers is potentially compromised, because an attacker can then issue their own certificates for any domain name, and clients that are configured to trust the “fake” CA would accept those certificates.

- An additional drawback is that this mode of operation breaks HTTPS communication that uses client certificates for client identification.

- Independent security measures on the client side can detect the operation of an SSL-bumping proxy and refuse communication.
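
The trust relationships behind the proxy CA and the client-side detection can be illustrated with a toy model. It performs no cryptography. `Cert`, `chain_trusted` and `pin_ok` are hypothetical names, and certificate pinning is used here as one example of an independent client-side measure, which is an assumption of this sketch.

```python
from dataclasses import dataclass
from typing import Dict, Set


@dataclass(frozen=True)
class Cert:
    subject: str      # domain name the certificate claims to represent
    issuer: str       # name of the CA that signed it
    fingerprint: str  # stand-in for a hash of the certificate's public key


def chain_trusted(cert: Cert, trusted_cas: Set[str]) -> bool:
    """Toy check: accept a certificate if its issuer is in the trust store."""
    return cert.issuer in trusted_cas


def pin_ok(cert: Cert, pins: Dict[str, str]) -> bool:
    """Toy pinning check: a pinned domain must present the expected fingerprint."""
    expected = pins.get(cert.subject)
    return expected is None or cert.fingerprint == expected


# A client configured to trust the proxy CA next to a public CA.
trusted = {"Public CA", "Proxy CA"}
genuine = Cert("bank.example", "Public CA", "aa11")
bumped = Cert("bank.example", "Proxy CA", "ff99")
pins = {"bank.example": "aa11"}
```

In this model `chain_trusted(bumped, trusted)` is true, so whoever controls the proxy CA can produce an accepted certificate for any domain. `pin_ok(bumped, pins)` is false, which corresponds to a client detecting the interception and refusing to communicate.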

With HTTPS not being cacheable without breaking TLS security, modern caching systems focus on caching resources at reverse proxies put in front of webservers as well as using HTTP cache semantics in ways that are optimized for private user agent caches. This tendency can lead to additional unexpected security flaws when encryption is disrupted and resources are exposed to shared caching.
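
One way such cache semantics are expressed is the `Cache-Control` response header, where the `private` directive limits storage to a single user's cache and `no-store` forbids storage entirely. The sketch below is a deliberately simplified storability check. The function names are hypothetical, the header is assumed to be present under the exact key `Cache-Control`, and quoted directive arguments, freshness and request headers are ignored.

```python
from typing import Dict, Set


def parse_cache_control(value: str) -> Set[str]:
    """Return the lower-cased directive names of a Cache-Control value."""
    names: Set[str] = set()
    for part in value.split(","):  # naive split: ignores quoted arguments
        name = part.split("=", 1)[0].strip().lower()
        if name:
            names.add(name)
    return names


def shared_cache_may_store(headers: Dict[str, str]) -> bool:
    """Simplified rule: a shared cache skips 'private' and 'no-store' responses."""
    directives = parse_cache_control(headers.get("Cache-Control", ""))
    return not ({"private", "no-store"} & directives)


def private_cache_may_store(headers: Dict[str, str]) -> bool:
    """Simplified rule: a private cache only skips 'no-store' responses."""
    directives = parse_cache_control(headers.get("Cache-Control", ""))
    return "no-store" not in directives
```

A response marked `Cache-Control: private, max-age=600` is storable by the user agent's cache but not by a shared cache. That protection holds only as long as every intermediary that can see the decrypted response honors the directive.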

[Nguyen 2019] provides additional discussion of the security implications of SSL/TLS interception and also provides partial alternatives from a security analysis point of view.